Generative adversarial networks (GANs) are neural networks that generate material, such as images, music, speech, or text, that is similar to what humans produce.

GANs have been an active topic of research in recent years. Facebook’s AI research director Yann LeCun called adversarial training “the most interesting idea in the last 10 years” in the field of machine learning. Below, you’ll learn how GANs work before implementing two generative models of your own.

In this tutorial, you’ll learn:

- What a generative model is and how it differs from a discriminative model

- How GANs are structured and trained

- How to build your own GAN using PyTorch

- How to train your GAN for practical applications using a GPU and PyTorch

Let’s get started!


What Are Generative Adversarial Networks?

Generative adversarial networks are machine learning systems that can learn to mimic a given distribution of data. They were first proposed in a 2014 NeurIPS paper by deep learning expert Ian Goodfellow and his colleagues.

GANs consist of two neural networks, one trained to generate data and the other trained to distinguish fake data from real data (hence the “adversarial” nature of the model). Although the idea of a structure to generate data isn’t new, when it comes to image and video generation, GANs have provided impressive results such as:

- Style transfer using CycleGAN, which can perform a number of convincing style transformations on images

- Generation of human faces with StyleGAN, as demonstrated on the website This Person Does Not Exist

Structures that generate data, including GANs, are considered generative models in contrast to the more widely studied discriminative models. Before diving into GANs, you’ll look at the differences between these two kinds of models.

Discriminative vs Generative Models

If you’ve studied neural networks, then most of the applications you’ve come across were likely implemented using discriminative models. Generative adversarial networks, on the other hand, are part of a different class of models known as generative models.

Discriminative models are those used for most supervised classification or regression problems. As an example of a classification problem, suppose you’d like to train a model to classify images of handwritten digits from 0 to 9. For that, you could use a labeled dataset containing images of handwritten digits and their associated labels indicating which digit each image represents.

During the training process, you’d use an algorithm to adjust the model’s parameters. The goal would be to minimize a loss function so that the model learns the probability distribution of the output given the input. After the training phase, you could use the model to classify a new handwritten digit image by estimating the most probable digit the input corresponds to, as illustrated in the figure below:

You can picture discriminative models for classification problems as blocks that use the training data to learn the boundaries between classes. They then use these boundaries to discriminate an input and predict its class. In mathematical terms, discriminative models learn the conditional probability P(y|x) of the output y given the input x.

Besides neural networks, other structures, such as logistic regression models and support vector machines (SVMs), can be used as discriminative models.
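As a rough illustration of a model that learns P(y|x), here is a minimal sketch of logistic regression trained with plain gradient descent, using only the Python standard library. The toy dataset, the helper names (`sigmoid`, `train_logistic`), and the hyperparameters are assumptions made for this example and are not taken from the tutorial.

```python
import math
import random

def sigmoid(t):
    """Squash a real-valued score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-t))

def train_logistic(xs, ys, lr=0.1, epochs=200):
    """Fit w and b so that sigmoid(w * x + b) approximates P(y=1 | x)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # gradient of the log loss w.r.t. the score
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Hypothetical toy data: class 0 centered at -2, class 1 centered at +2
rng = random.Random(0)
xs = [rng.gauss(-2, 1) for _ in range(100)] + [rng.gauss(2, 1) for _ in range(100)]
ys = [0] * 100 + [1] * 100

w, b = train_logistic(xs, ys)
p_pos = sigmoid(w * 3.0 + b)   # estimated P(y=1 | x=3)
p_neg = sigmoid(w * -3.0 + b)  # estimated P(y=1 | x=-3)
print(f"P(y=1 | x=3)  = {p_pos:.3f}")
print(f"P(y=1 | x=-3) = {p_neg:.3f}")
```

The model never describes how the inputs themselves are distributed. It only learns where the boundary between the two classes lies, which is what makes it discriminative.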

Generative models like GANs, however, are trained to describe how a dataset is generated in terms of a probabilistic model. By sampling from a generative model, you’re able to generate new data. While discriminative models are used for supervised learning, generative models are often used with unlabeled datasets and can be seen as a form of unsupervised learning.

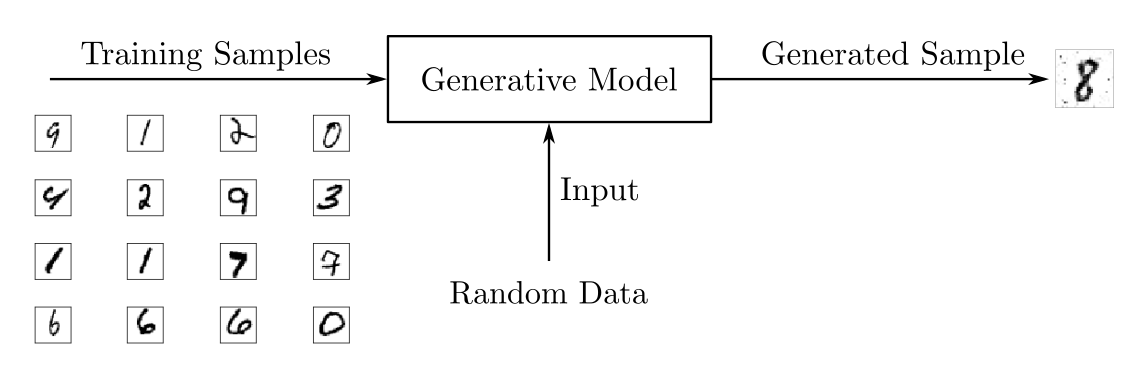

Using the dataset of handwritten digits, you could train a generative model to generate new digits. During the training phase, you’d use some algorithm to adjust the model’s parameters to minimize a loss function and learn the probability distribution of the training set. Then, with the model trained, you could generate new samples, as illustrated in the following figure:

To output new samples, generative models usually include a stochastic, or random, element that influences the samples they generate. The random samples used to drive the generator are drawn from a latent space in which the vectors represent a kind of compressed form of the generated samples.

Unlike discriminative models, generative models learn the probability P(x) of the input data x, and by having the distribution of the input data, they’re able to generate new data instances.
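To make the idea of learning P(x) and then sampling from it concrete, here is a deliberately simple sketch that fits a one-dimensional Gaussian to some data and generates new values by transforming random noise. This is not a GAN. The Gaussian model, the toy dataset, and the `generate` helper are assumptions chosen only to keep the example small.

```python
import random
import statistics

# Hypothetical training set: 1-D samples from a distribution the model must learn
rng = random.Random(42)
training_data = [rng.gauss(5.0, 2.0) for _ in range(2000)]

# "Training": estimate the parameters of a Gaussian model of P(x)
mu = statistics.fmean(training_data)
sigma = statistics.pstdev(training_data)

def generate(n, rng):
    """Draw latent noise z ~ N(0, 1) and map it into data space."""
    return [mu + sigma * rng.gauss(0.0, 1.0) for _ in range(n)]

new_samples = generate(5, rng)
print(f"learned mu={mu:.2f}, sigma={sigma:.2f}")
print("generated:", [round(x, 2) for x in new_samples])
```

Here the standard normal noise plays the role of the latent sample, and the mapping `mu + sigma * z` plays the role of the generator. A GAN replaces that fixed mapping with a neural network whose parameters are learned.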

Note: Generative models can also be used with labeled datasets. When they are, they’re trained to learn the probability P(x|y) of the input x given the output y. They can also be used for classification tasks, but in general, discriminative models perform better when it comes to classification.

You can find more information on the relative strengths and weaknesses of discriminative and generative classifiers in the article On Discriminative vs. Generative Classifiers: A comparison of logistic regression and naive Bayes.
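The note above can be sketched with a small generative classifier: it learns P(x|y) for each label, then applies Bayes’ rule to obtain P(y|x) for classification. The class-conditional Gaussian model, the toy data, and the function names below are assumptions made for illustration only.

```python
import math
import random
import statistics

def gaussian_pdf(x, mu, sigma):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

# Hypothetical labeled data: two classes with different means
rng = random.Random(1)
data = {
    0: [rng.gauss(-2.0, 1.0) for _ in range(500)],
    1: [rng.gauss(2.0, 1.0) for _ in range(500)],
}

# Learn P(x | y) for each class, plus the class prior P(y)
total = sum(len(xs) for xs in data.values())
params = {
    y: (statistics.fmean(xs), statistics.pstdev(xs), len(xs) / total)
    for y, xs in data.items()
}

def posterior(x):
    """Return P(y | x) for every class y using Bayes' rule."""
    joint = {
        y: gaussian_pdf(x, mu, sigma) * prior
        for y, (mu, sigma, prior) in params.items()
    }
    evidence = sum(joint.values())
    return {y: p / evidence for y, p in joint.items()}

def sample(y):
    """Generate a new x for a chosen label y from the learned P(x | y)."""
    mu, sigma, _ = params[y]
    return rng.gauss(mu, sigma)

post = posterior(1.5)
print({y: round(p, 3) for y, p in post.items()})
print("new sample for class 0:", round(sample(0), 2))
```

The same learned distributions serve both purposes: `sample` generates data for a given label, while `posterior` classifies an input.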

Although GANs have received a lot of attention in recent years, they’re not the only architecture that can be used as a generative model. Besides GANs, there are various other generative model architectures such as:

Read the full article at https://realpython.com/generative-adversarial-networks/ »
