Ever found yourself wondering how to convert an image to SAM Coupé MODE 4 SCREEN$ format? No? Probably not, but I'm going to tell you anyway.

The SAM Coupé was a British 8-bit home computer that was pitched as a successor to the ZX Spectrum.

The high-color MODE 4 could manage 256x192 resolution graphics, with 16 colors from a choice of 127. Each pixel can be set individually, rather than using PEN/PAPER attributes as on the Spectrum. But there's more. The SAM also supports line interrupts, which allow palette entries to be changed on particular scan lines, so a single palette entry can actually be used to display multiple colors.

The limitation that color can only be changed per line means it's not really useful for games, or other moving graphics. But it does allow you to use a completely separate palette for "off screen" elements like panels. For static images, such as photos, it's more useful, assuming that the distribution of color in the image is favorable.

tip: If you just want the converter, you can get it here. It is written in Python, using Pillow for image color conversion.

First a quick look at the SAM Coupé screen modes to see what we're dealing with.

SAM Coupé Screen Modes

There are 4 screen modes on the SAM Coupé.

- MODE 1 is the ZX Spectrum compatible mode, with 8x8 blocks which can each contain 2 colors: PAPER (background) and PEN (foreground). The framebuffer in MODE 1 is non-linear: in memory, line 0 is followed by line 8.

- MODE 2 also uses attributes, with PAPER and PEN, but the cells are 8x1 pixels and the framebuffer is linear. This mode wasn't used a great deal.

- MODE 3 is high resolution, with double the X pixels but only 4 colors, making it good for displaying text.

- MODE 4 is the high color mode, with 256x192 and independent coloring of every pixel from a palette of 16. Most games/software used this mode.

| Mode | Dimensions | Framebuffer | bpp | Colors | Size | Notes |
|---|---|---|---|---|---|---|
| 4 | 256×192 | linear | 4 | 16 | 24 KB | High color |
| 3 | 512×192 | linear | 2 | 4 | 24 KB | High resolution |
| 2 | 256×192 | linear | 1 | 16 | 12 KB | Color attributes for each 8x1 block |
| 1 | 256×192 | non-linear | 1 | 16 | 6.75 KB | Color attributes for each 8×8 block; matches ZX Spectrum |

Most SAM Coupé SCREEN$ files were in MODE 4, so that's what we'll be targeting. It would be relatively easy to support MODE 3 on top of this.

The format itself is fairly simple, consisting of the following bytes.

| Bytes | Content |
|---|---|
| 24576 | Pixel data. Mode 4 is 4bpp (1 byte = 2 pixels); Mode 3 is 2bpp (1 byte = 4 pixels) |
| 16 | Mode 4 Palette A |
| 4 | Mode 3 Palette A store |
| 16 | Mode 4 Palette B |
| 4 | Mode 3 Palette B store |
| Variable | Line interrupts, 4 bytes per interrupt (see below) |
| 1 | 0xFF termination byte |

In MODE 4 the pixel data is 4bpp, that is 1 byte = 2 pixels (16 possible colors per pixel). To handle this we can create our image with 16 colors and bit-shift the values before packing adjacent pixels into a single byte.
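The section sizes in the table above are enough to work out where each part of the file starts. Here's a minimal sketch in Python; the constant and function names are illustrative, not taken from img2sam.

```python
# Section sizes taken from the SCREEN$ layout table (MODE 4).
PIXEL_BYTES = 256 * 192 // 2   # 4bpp: 2 pixels per byte -> 24576
PALETTE_BYTES = 16             # one palette entry per MODE 4 color
STORE_BYTES = 4                # MODE 3 palette store
INTERRUPT_BYTES = 4            # size of each line interrupt record


def section_offsets():
    """Return the start offset of each section of a SCREEN$ file."""
    sizes = [
        ("pixels", PIXEL_BYTES),
        ("palette_a", PALETTE_BYTES),
        ("store_a", STORE_BYTES),
        ("palette_b", PALETTE_BYTES),
        ("store_b", STORE_BYTES),
    ]
    offsets, pos = {}, 0
    for name, size in sizes:
        offsets[name] = pos
        pos += size
    offsets["interrupts"] = pos  # variable length, terminated by 0xFF
    return offsets


def file_size(n_interrupts):
    """Fixed sections + 4 bytes per interrupt + the 0xFF terminator."""
    return section_offsets()["interrupts"] + INTERRUPT_BYTES * n_interrupts + 1


print(file_size(12))  # 24665
```

So a SCREEN$ with no interrupts is 24617 bytes, and each interrupt adds another 4.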

Palette A & B

As shown in the table above, the SAM actually supports two simultaneous palettes (here marked A & B). These are full palettes which the SAM alternates between, by default 3 times per second, to create flashing effects. The entire palette is switched, but by making the two palettes identical apart from one entry you can flash just a single color. The rate of flashing is configurable with:

    POKE &5A08, <value>

The <value> is the time between swaps of the alternate palettes, in 50ths of a second. This is generally only useful for creating flashing cursor effects. For converting to SAM SCREEN$ we'll ignore this and just duplicate the palette.

note: The exporter supports palette flash for GIF export.

MODE 3 Store

The palettes of MODE 3 and MODE 4 are separate, but palette operations act on the same CLUT (color look-up table). When changing mode, 4 colors are set aside in a temporary store and restored when switching back. These values are also saved in SCREEN$ files (see the "store" entries above), so you can replace the MODE 3 palette by loading a MODE 4 screen. It's a bit odd.

We can ignore this for our conversions and just write a default set of bytes.

Interrupts

Interrupts define locations on the screen where a given palette entry (0-15) changes to a different color from the 127-color system palette. They are encoded with 4 bytes per interrupt, with multiple interrupts appended one after another.

| Bytes | Content |
|---|---|
| 1 | Y position, stored as 172-y (see below) |
| 1 | Color to change |
| 1 | Palette A |
| 1 | Palette B |

Interrupt coordinates set from BASIC run from -18 at the bottom of the screen up to 172 at the top. The plot range in BASIC is actually 0..173, but interrupts can't affect the first (top) line, which makes sense, since that line is handled through the main palette.

When stored in the file, line interrupts are stored as 172-y. For example, a line interrupt at y=150 is stored in the file as 22. The line interrupt nearest the top of the screen (1st row down, interrupt position 172) would be stored as 172-172=0.

This sounds complicated, but actually means that to get our interrupt Y byte we can just subtract 1 from the row number in the image (counting from row 0 at the top).
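The coordinate conversions and the 4-byte record can be sketched as a few small Python helpers. These names are illustrative, not the converter's own, and the range checks assume a 192-line MODE 4 screen with image row 0 at the top (BASIC y = 173 - row).

```python
import struct


def basic_y_to_file_byte(y):
    """BASIC interrupt coordinate (-18..172) -> byte stored in the file."""
    if not -18 <= y <= 172:
        raise ValueError("interrupt y must be in -18..172")
    return 172 - y


def image_row_to_file_byte(row):
    """Image row (0 = top) -> stored byte. Row 0 can't take an interrupt."""
    if not 1 <= row <= 191:
        raise ValueError("interrupt row must be in 1..191")
    return row - 1


def encode_interrupt(row, color, palette_a, palette_b):
    """Pack one line interrupt: Y byte, palette entry, new color A, new color B."""
    if not 0 <= color <= 15:
        raise ValueError("palette entry must be 0..15")
    return struct.pack("4B", image_row_to_file_byte(row), color, palette_a, palette_b)


print(basic_y_to_file_byte(150))  # 22
```

Both routes agree: BASIC y=150 is image row 23, and either way the stored byte is 22.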

Converting Image to SCREEN$

We now have all the information we need to convert an image into a SCREEN$ format. The tricky bit (and what takes most of the work) is optimizing the placement of the interrupts to maximize the number of colors in the image.

Pre-processing

Processing is done using the Pillow package for Python. Input images are resized and cropped to fit using the ImageOps.fit() method, with centering.

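    from PIL import Image, ImageOps

    SAM_COUPE_MODE4 = (256, 192, 16)
    WIDTH, HEIGHT, MAX_COLORS = SAM_COUPE_MODE4

    im = Image.open(fn)

    # Resize with crop to fit, centered. Image.ANTIALIAS was removed in
    # Pillow 10; Image.LANCZOS is the same filter under its current name.
    im = ImageOps.fit(im, (WIDTH, HEIGHT), Image.LANCZOS, 0, (0.5, 0.5))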

If the above crop is bad, you can adjust it by pre-cropping/sizing the image beforehand. There's no option to shrink without cropping, since any border area would waste a palette entry on the blank space.

Interrupts

This is the bulk of the process for generating optimized images. The optimize method is shown below; it shows the high-level steps taken to reach the optimal number of colors, using interrupts to compress colors.

    def optimize(im, max_colors, total_n_colors):
        """
        Attempts to optimize the number of colors in the screen using interrupts.
        Returns the best number of colors found and a dictionary of color
        regions, keyed by color number.
        """
        # calculate_color_regions, compress_non_overlapping, simplify,
        # split_initial_colors, n_interrupts and MAX_INTERRUPTS are defined
        # elsewhere in the converter (not shown here).
        optimal_n_colors = max_colors
        optimal_color_regions = {}
        optimal_total_interrupts = 0

        for n_colors in range(max_colors, total_n_colors + 1):
            # Identify color regions.
            color_regions = calculate_color_regions(im, n_colors)

            # Compress non-overlapping colors together.
            color_regions = compress_non_overlapping(color_regions)

            # Simplify our color regions.
            color_regions = simplify(color_regions)

            total_colors = len(color_regions)

            # Calculate how many interrupts we're using, dropping the initial blocks.
            _, interrupts = split_initial_colors(color_regions)
            total_interrupts = n_interrupts(interrupts)

            print("- trying %d colors, with interrupts uses %d colors & %d interrupts"
                  % (n_colors, total_colors, total_interrupts))

            if total_colors <= max_colors and total_interrupts <= MAX_INTERRUPTS:
                optimal_n_colors = n_colors
                optimal_color_regions = color_regions
                optimal_total_interrupts = total_interrupts
                continue

            # Too many palette slots or interrupts: keep the last good result.
            break

        print("Optimized to %d colors with %d interrupts (using %d palette slots)"
              % (optimal_n_colors, optimal_total_interrupts, len(optimal_color_regions)))

        return optimal_n_colors, optimal_color_regions

The method accepts the image to compress and a max_colors argument, which is the number of colors supported by the screen mode (16). This is a lower bound: the minimum number of colors we should be able to get in the image. The argument total_n_colors contains the total number of colors in the image, capped at 127 (the number of colors in the SAM palette). This is the upper bound, the maximum number of colors we can use. If total_n_colors is less than 16, optimization is skipped.

Each optimization round is as follows:

- calculate_color_regions generates a dictionary of color regions in the image. Each region is a (start, end) tuple of y positions in the image where a particular color is found. Each color will usually have many blocks.

- compress_non_overlapping takes colors with few blocks and tries to combine them with other colors with no overlapping regions: transitions between colors will be handled by interrupts.

- simplify takes the resulting color regions and tries to simplify them further, grouping blocks back with their own colors if they can and then combining adjacent blocks.

- total_colors: the length of color_regions is now the number of colors used.

- split_initial_colors removes the first block, to get the total number of interrupts.

note: The compress_non_overlapping algorithm makes no effort to find the best compression of regions. I experimented with this a bit and it just explodes the number of interrupts for little real gain in image quality.
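The real calculate_color_regions and compress_non_overlapping aren't shown here, so the following is only a hypothetical sketch of the idea, not the img2sam code: find the rows where each color appears, greedily share a palette slot between colors whose rows never overlap, and count one interrupt wherever a slot switches to a different color. The function names are made up, the input is assumed to be a 2D NumPy array of quantized color indices, and (like the original) it makes no attempt to find the best packing.

```python
import numpy as np


def row_runs(pixels, color):
    """Inclusive (start, end) runs of rows in which `color` appears."""
    runs = []
    for r in np.flatnonzero((pixels == color).any(axis=1)).tolist():
        if runs and r == runs[-1][1] + 1:
            runs[-1] = (runs[-1][0], r)  # extend the current run
        else:
            runs.append((r, r))          # start a new run
    return runs


def overlaps(runs_a, runs_b):
    """True if any run in runs_a shares a row with any run in runs_b."""
    return any(s1 <= e2 and s2 <= e1 for s1, e1 in runs_a for s2, e2 in runs_b)


def pack_slots(pixels, n_colors):
    """Greedily share palette slots between colors whose rows never overlap.

    Returns a list of slots, each a list of (color, runs) pairs."""
    regions = {c: row_runs(pixels, c) for c in range(n_colors)}
    slots = []
    # Colors with the fewest runs are tried first, as the cheapest to share.
    for color, runs in sorted(regions.items(), key=lambda kv: len(kv[1])):
        if not runs:
            continue  # color doesn't appear in the image
        for slot in slots:
            if not any(overlaps(runs, other) for _, other in slot):
                slot.append((color, runs))
                break
        else:
            slots.append([(color, runs)])
    return slots


def count_interrupts(slot):
    """One interrupt wherever the slot switches to a different color.

    The first block comes from the main palette, so it needs no interrupt."""
    blocks = sorted((start, color) for color, runs in slot for start, _ in runs)
    return sum(1 for prev, cur in zip(blocks, blocks[1:]) if prev[1] != cur[1])
```

With this, len(pack_slots(...)) plays the role of total_colors, and summing count_interrupts over the slots gives the interrupt count to check against the limit.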

The optimization process is brute force: step forward, increase the number of colors by 1 and perform the optimization steps above. If the result needs more than 16 palette slots (or more than MAX_INTERRUPTS interrupts) we've gone too far, so we return the last successful result, which fits in 16 palette slots.

SAM Coupé Palette

Once we have the colors for the image we map the image over to the SAM Coupé palette. Every pixel in the image must have a value between 0 and 15; pixels for colors controlled by interrupts are mapped to their "parent" color. Finally, all the colors are mapped across from their RGB values to the nearest SAM palette number equivalent.

note: This is sub-optimal, since the choice of colors should really be informed by the colors available. But I couldn't find a way to get Pillow to quantize to a fixed palette without dithering.

The mapping is done by calculating the distance in RGB space for each color to each color in the SAM 127 color palette, using the usual RGB color distance algorithm.

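    def convert_image_to_sam_palette(image, colors=16):
        # SAM_PALETTE (the SAM colors as (r, g, b) tuples) and distance()
        # are defined elsewhere in the converter (not shown here).
        new_palette = []
        rgb = image.getpalette()[:colors * 3]

        for r, g, b in zip(rgb[::3], rgb[1::3], rgb[2::3]):
            # Pick the SAM color closest to this palette entry.
            nearest = min(SAM_PALETTE, key=lambda o: distance(o, (r, g, b)))
            new_palette.append(nearest)

        # Flatten [(r, g, b), ...] to [r, g, b, r, g, b, ...] for Pillow.
        palette = [c for color in new_palette for c in color]
        image.putpalette(palette)

        return image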
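The distance() helper isn't shown above. Here's a minimal sketch, assuming plain Euclidean distance in RGB space (a perceptually weighted formula could be swapped in). nearest_index is a hypothetical helper that returns the palette index rather than the color, since the index is what ends up in the file. The three-color palette is a stand-in for illustration, not the real SAM palette.

```python
def distance(c1, c2):
    """Squared Euclidean distance between two (r, g, b) colors.

    Squaring preserves the ordering, so the square root can be skipped."""
    return sum((a - b) ** 2 for a, b in zip(c1, c2))


def nearest_index(color, palette):
    """Index of the palette entry closest to `color` (first one wins on ties)."""
    return min(range(len(palette)), key=lambda i: distance(color, palette[i]))


# Stand-in palette for illustration only; the real SAM palette is much larger.
demo_palette = [(0, 0, 0), (255, 0, 0), (0, 0, 255)]
print(nearest_index((250, 10, 10), demo_palette))  # 1
```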

Packing bits

Now that our image contains only pixel values 0-15, we can pack the bits and export the data. We iterate through the flattened data in steps of 2, packing each pair of pixels into a single byte:

    import numpy as np

    # image16: the palettized image, with every pixel value in 0-15.
    pixels = np.array(image16)
    pixel_data = pixels.flatten()

    # Generate bytestream; pack to 2 pixels/byte (left pixel in the high nibble).
    image_data = []
    for a, b in zip(pixel_data[::2], pixel_data[1::2]):
        byte = (a << 4) | b
        image_data.append(byte)

    image_data = bytearray(image_data)

The operation a << 4 shifts the bits of integer a left by 4, so 15 (00001111) becomes 240 (11110000), while | ORs the result with b. If a = 0100 and b = 0011 the result would be 01000011 with both values packed into a single byte.
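The same packing can also be done without a Python loop, using NumPy slicing and shifts. This is a sketch with illustrative function names (pack_4bpp and unpack_4bpp are not from img2sam); unpack_4bpp reverses the operation, which is handy for checking the output.

```python
import numpy as np


def pack_4bpp(pixels):
    """Pack 0-15 pixel values two per byte, left pixel in the high nibble."""
    flat = np.asarray(pixels, dtype=np.uint8).ravel()
    if flat.size % 2:
        raise ValueError("need an even number of pixels")
    if flat.size and flat.max() > 15:
        raise ValueError("pixel values must be 0-15")
    return ((flat[0::2] << 4) | flat[1::2]).tobytes()


def unpack_4bpp(data):
    """Reverse pack_4bpp: split each byte back into two 0-15 values."""
    packed = np.frombuffer(data, dtype=np.uint8)
    pixels = np.empty(packed.size * 2, dtype=np.uint8)
    pixels[0::2] = packed >> 4    # high nibble -> left pixel
    pixels[1::2] = packed & 0x0F  # low nibble -> right pixel
    return pixels


print(pack_4bpp([15, 0, 4, 3]).hex())  # f043
```

A full 256x192 image packs down to 24576 bytes, matching the pixel data size in the SCREEN$ layout.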

Writing the SCREEN$

Finally, the image data is written out, along with the palette data and line interrupts.

    # Additional 4 bytes 0, 17, 34, 127; mode 3 temporary store.
    bytes4 = b'\x00\x11\x22\x7F'

    # palette: assumed to be a bytes object of 16 SAM palette numbers.
    # interrupts: 4 bytes per line interrupt, appended one after another.
    with open(outfile, 'wb') as f:
        f.write(image_data)

        # Write palette.
        f.write(palette)

        # Write extra bytes (4 bytes, 2nd palette, 4 bytes)
        f.write(bytes4)
        f.write(palette)
        f.write(bytes4)

        # Write line interrupts
        f.write(interrupts)

        # Write final byte.
        f.write(b'\xff')
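Building the whole file in memory first makes it easy to check the section lengths before anything is written to disk. This is a sketch under the same assumptions as above (palette holds 16 SAM palette numbers, interrupts are already encoded as 4-byte records); build_screen is a hypothetical name, not part of img2sam.

```python
MODE3_STORE = b"\x00\x11\x22\x7f"  # default MODE 3 store bytes: 0, 17, 34, 127


def build_screen(pixel_data, palette, interrupts=b"", store=MODE3_STORE):
    """Assemble a MODE 4 SCREEN$ in memory, writing the same palette as A and B."""
    pixel_data, palette, interrupts = bytes(pixel_data), bytes(palette), bytes(interrupts)
    if len(pixel_data) != 256 * 192 // 2:
        raise ValueError("MODE 4 pixel data must be 24576 bytes")
    if len(palette) != 16:
        raise ValueError("palette must have 16 entries")
    if len(interrupts) % 4:
        raise ValueError("line interrupts are 4 bytes each")
    return pixel_data + palette + store + palette + store + interrupts + b"\xff"
```

Writing the file then becomes a single call: `f.write(build_screen(image_data, palette, interrupts))`.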

To actually view the result, I recommend the SAM Coupé Advanced Disk Manager.

You can see the source code for the img2sam converter on Github.

Examples

Below are some example images, converted from PNG/JPG source images to SAM Coupé MODE 4 SCREEN$ and then back into PNGs for display. The palette of each image is restricted to the SAM Coupé's 127 colors and colors are modified using interrupts.

Pool 16 colors, no interrupts

Pool 24 colors, 12 interrupts (compare gradients)

This image pair shows the effect of line interrupts on an image without dither. The separation between the differently colored pool balls makes this a good candidate.

Leia 26 colors, 15 interrupts

Tully 22 colors, 15 interrupts

The separation between the helmet (blue, yellow components) and the horizontal line in the background makes this work out nicely. Same for the second image of Tully below.

Isla 18 colors, 6 interrupts

Tully (2) 18 colors, 5 interrupts

Dana 17 colors, 2 interrupts

Lots of images don't compress well because the same shades are used throughout the image. This is made worse by the conversion to the SAM's limited palette of 127 colors.

Interstellar 17 colors, 3 interrupts

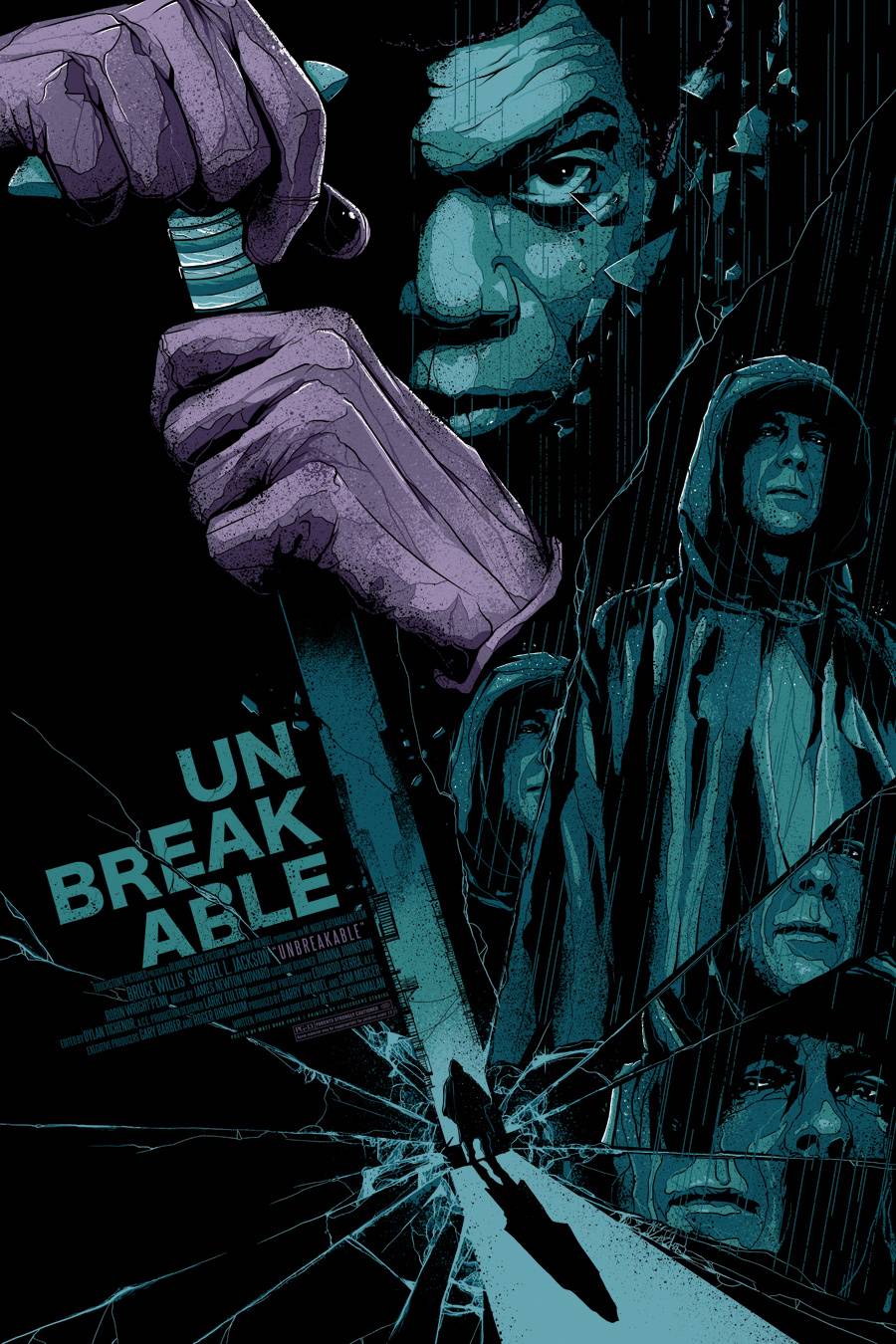

Blade Runner 16 colors (11 used), 18 interrupts

This last image doesn't manage to squeeze more than 16 colors out of the image, but it does reduce the number of palette slots needed for those 16 colors to just 11. This gives you 5 spare colors to add something else to the image.

Converting SCREEN$ to Image

Included in the scrimage package is the sam2img converter, which will take a SAM MODE 4 SCREEN$ and convert it to an image. The conversion process respects interrupts, and when exporting to GIF it will export flashing palettes as animations.

The images above were all created using sam2img on SCREEN$ files created with img2sam. The following two GIFs are examples of export from SAM Coupé SCREEN$ files with flashing palettes.
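Going the other way, a SCREEN$ can be split back into its parts using the layout described earlier. The sketch below is not the sam2img source: it unpacks the pixel indices, reads both palettes and the interrupt list up to the 0xFF terminator (converting stored Y bytes back to image rows by adding 1), and works out the palette in effect on a given row. The helper names are hypothetical, and palette_at_row assumes that a color changed by an interrupt stays changed for the rest of the frame.

```python
import numpy as np

PIXEL_BYTES = 24576  # 256x192 at 4bpp
PALETTE_A = 24576    # 16 bytes
PALETTE_B = 24596    # 16 bytes, after the 4-byte MODE 3 store
INTERRUPTS = 24616   # after the second 4-byte store


def parse_screen(data):
    """Split a MODE 4 SCREEN$ into pixel indices, palettes A/B and interrupts."""
    packed = np.frombuffer(data[:PIXEL_BYTES], dtype=np.uint8)
    pixels = np.empty(packed.size * 2, dtype=np.uint8)
    pixels[0::2] = packed >> 4    # left pixel: high nibble
    pixels[1::2] = packed & 0x0F  # right pixel: low nibble
    palette_a = list(data[PALETTE_A:PALETTE_A + 16])
    palette_b = list(data[PALETTE_B:PALETTE_B + 16])

    interrupts = []
    pos = INTERRUPTS
    while pos < len(data) and data[pos] != 0xFF:
        y, color, a, b = data[pos:pos + 4]
        interrupts.append((y + 1, color, a, b))  # stored byte + 1 = image row
        pos += 4
    return pixels.reshape(192, 256), palette_a, palette_b, interrupts


def palette_at_row(palette, interrupts, row, use_b=False):
    """Palette in effect on image `row`, applying every interrupt at or above it."""
    current = list(palette)
    for irow, color, a, b in sorted(interrupts):
        if irow <= row:
            current[color] = b if use_b else a
    return current
```

Mapping each row's pixel indices through palette_at_row (and through the SAM palette's RGB values) is enough to render the image; rendering once with use_b=False and once with use_b=True gives the two frames of a flashing palette.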

Flashing palette

Flashing palette and flashing Line interrupts

You can see the source code for the sam2img converter on Github.

from Planet Python
